Question: How reliable are the sentiment indicators for tweets posted after the grand jury decision came out (November 25th to present)?

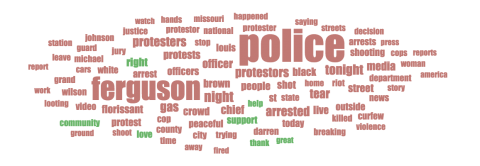

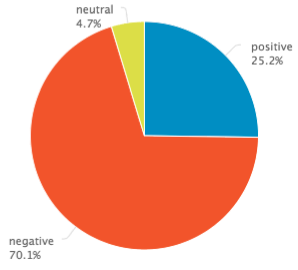

Analysis of word cloud: Just by eyeballing my word cloud, it is clear that the majority of the words are negative (red), with only 7 positive (green) words. Further examination in the ‘clusters’ settings confirmed this: only 25.5% of the words tweeted after the grand jury decision were positive, while negative words made up 70.1%. Analyzing the word cloud led me to believe that while there was a common theme, namely a great deal of negative sentiment during this time, there was still a significant number of positive tweets entering the twittersphere. This data, however, raised a lot of questions for me. I was interpreting this data and drawing conclusions, but what percentage of the positive (green) sentiment words might have been sarcasm, and therefore not accurate representations of their supposed sentiment? Could my word cloud be trusted to accurately and reliably reveal the sentiment in tweets relating to Ferguson after the grand jury decision?

Conclusions drawn from further investigation: As it turns out, the results of my work differ from my initial impression of the search results shown in my word cloud. The emphasized green/positive words in my word cloud did not always represent what they seemed to at first glance. There was hidden and downplayed information that I was able to uncover by clicking on specific, seemingly positive words in my cloud. I clicked on the green/positive word ‘love’. While some of the resulting tweets did use the word to strengthen positive messages, others used ‘love’ sarcastically to add negative tweets to the conversation. Pulsar sees the word ‘love’ and assumes the tweet is positive; however, as just shown, this is not always true. Therefore, the data and percentages describing the overall sentiment in tweets following the grand jury decision are misleading and inaccurate. These findings relate to the issues brought up in the article we read, “Big Data: Methodological Challenges and Approaches for Sociological Analysis.” Big data as a whole is incredibly useful but does have flaws. Details can slip through the cracks, and important inferences are often not made because they are deemed not relevant or important enough to significantly change the outcome of a data set. This was evident when evaluating my word cloud and seeing the clear generalizations that were made in the name of big data and efficiency but that were actually producing inaccuracies in the data.
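To make the sarcasm problem concrete, here is a minimal sketch of a keyword-based sentiment scorer in Python. It is only an illustration of the general approach of labeling a tweet by the words it contains. The word lists, the `keyword_sentiment` function, and the two example tweets are all made up for this sketch, and nothing here is Pulsar's actual method or data.

```python
# Hypothetical mini-lexicons, invented for illustration only.
# These are NOT Pulsar's word lists or its algorithm.
POSITIVE = {"love", "peace", "hope"}
NEGATIVE = {"hate", "angry", "unjust"}


def keyword_sentiment(tweet: str) -> str:
    """Label a tweet by counting positive and negative keywords."""
    # Lowercase each word and strip common punctuation and hashtags.
    words = [w.strip(".,!?\"'#").lower() for w in tweet.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Made-up example tweets: one sincere, one sarcastic.
sincere = "Sending love and hope to everyone in Ferguson tonight."
sarcastic = "Oh, I just love how this decision turned out."

print(keyword_sentiment(sincere))    # positive
print(keyword_sentiment(sarcastic))  # positive, even though the tone is negative
```

Because the scorer only looks at individual words, both tweets receive the same label. A tool that works this way would count the sarcastic tweet toward the positive percentage, which is the kind of inflation I suspected when I clicked through the word ‘love’.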
